Today, creating a real-time 3D experience requires a huge effort in asset generation. Most of the time, 3D artists create 3D models from scratch, using their imagination and/or existing material such as pictures, videos, CAD models or point clouds. The final goal is to produce data that real-time engines can handle easily: optimized, textured meshes. No source data is ready for a real-time experience: pictures are 2D and require pure 3D creation, CAD models require heavy optimization, and point clouds must be turned into lightweight meshes with semantics.

The point is that immersive 3D devices are blooming, but the production process is not evolving as fast. We are still using the same process we used years ago. If we exclude pure environment invention, as in video games, we have to admit that using 3D captured reality is not the easy process it should be.

On one side, we see the rise of new technologies for capturing reality: 3D laser scanners; new depth sensors such as the Kinect (time of flight) and the new Project Kinect for Azure; photogrammetry or videogrammetry software working from 2D inputs; stereoscopic cameras; and AR frameworks such as ARCore or ARKit, which rely on SLAM to better align the virtual with the real. This approach is also used in hands-free AR devices such as HoloLens or Meta glasses, which generate on the fly a 3D environment mesh that fits reality.

Matterport brings an easy scanning approach with its camera, which lets anybody create a VR visit mixing 360° pictures with generated 3D. We also had a very interesting experience with the ZED camera, which allows low-cost, long-range scanning and is very efficient with the open source project RTAB-Map and its loop closure detection.

All this is very promising, and a lot of content is already created this way: take a look at the 3D scan library on Sketchfab.

3D scan for cultural heritage

But the difficulty lies in transforming the captured data into data that is ready to use in an immersive experience. The raw output of all these capture processes is a point cloud. But for many reasons, this type of data is not handled well by render engines. So we are still dealing with operations that turn point clouds into polygons.

Two ways to proceed:

- A 3D artist can “retopologize”, that is, use the point cloud as a guideline for modeling. It’s a long and costly process.

- A meshing algorithm such as Poisson surface reconstruction can be used: the result is far from perfect.

Whichever option you choose, a meshing algorithm or teaming up with 3D artists, the result will not be exact.

First, because a polygon is already an approximation between three vertices, so in some ways the 3D artist or the algorithm has to approximate the surface when linking the points. Of course, the 3D artist does better than the algorithm in that case. Moreover, the artist can add semantics to the meshes, which can be considered polygon soup at this stage: they can define that a given object is, for instance, a car, information that can then be used in the virtual experience. An algorithm can’t, even if machine learning with classification algorithms is making huge progress.

BIM/3D scan, real time and VR

So, there may be a shortcut: why not consider using the raw data as is for immersive experiences?

Of course, point clouds, unlike meshes, are not completely filled. But the data is exact, meaning that users can make exact interactions, such as reliable measurements, just as they can in real life. Moreover, some new approaches bring improvements, such as “splatting”, which can fill the holes of a point cloud for better visualization. This should keep getting better, as 3D scanner resolution is continually improving.
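To make the idea of an exact measurement concrete, here is a minimal Python sketch. It is only an illustration, not how any particular product does it: the function names `nearest_point` and `measure`, the toy cloud and the brute-force search are all assumptions made for the example. The two positions picked by the user are snapped to the closest scanned points, so the distance is computed between real captured samples rather than on an approximated surface.

```python
import math

def nearest_point(cloud, pick):
    """Return the scanned point closest to a picked 3D position (brute force)."""
    return min(cloud, key=lambda p: math.dist(p, pick))

def measure(cloud, pick_a, pick_b):
    """Snap two picks to scanned points and return them with their distance."""
    a = nearest_point(cloud, pick_a)
    b = nearest_point(cloud, pick_b)
    return a, b, math.dist(a, b)

# Hypothetical toy cloud, in metres: two wall corners plus one other point.
cloud = [(0.0, 0.0, 0.0), (3.0, 4.0, 0.0), (1.0, 1.0, 2.5)]

# The picks are slightly off; they snap to the first two scanned points.
a, b, d = measure(cloud, (0.1, -0.05, 0.0), (2.9, 4.1, 0.0))
print(a, b, d)
```

On a real scan with millions of points, the brute-force search would have to be replaced by a spatial index, which is one reason why the way the scene is organized matters so much.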

The same method may be investigated for the CAD world. CAD-based VR experiences are not created instantly either, because CAD models are too heavy for real-time render engines. 3D artists or optimization software can help with polygon decimation, jacketing, hidden removal, etc. But a point cloud rendering mode can help relieve the rendering pipeline, as rendering points is cheaper than rendering triangles.

Reality capture keeps being adopted in different industries: BIM, cultural heritage, energy, … Point clouds are already used, and that’s just the beginning. The data is processed in software that requires specific knowledge and is not aimed at real-time experiences. In a way, this software can be compared to CAD software: perfect for computing the point cloud, but not ready for generating lifelike experiences.

At Octarina, because we had more and more requests from partners who want to be naturally immersed in distant captured realities without a painful process, we developed a specific software component, CloudXP, to bring point clouds right into immersive applications.

Insurance/forensic investigation: using real time point clouds for natural and intuitive interaction

With this component added to their projects, application developers can create their own VR/real-time applications and easily add captured realities whatever their size. One of the main issues with point clouds is that the data is so huge it can’t be handled by real-time render engines; this is the reason why we developed CloudXP. For example, just imagine you can add an environment instantly after 3D capture and experience a VR training session.

It’s different from other technologies such as Potree, which is very efficient at streaming and sharing large point clouds in a browser. But we can’t exactly call it real time; it’s more of a dynamic level of detail, very useful but not designed to give a fully continuous, cognitive real-time experience as in a video game, with a reduced perception-action loop.

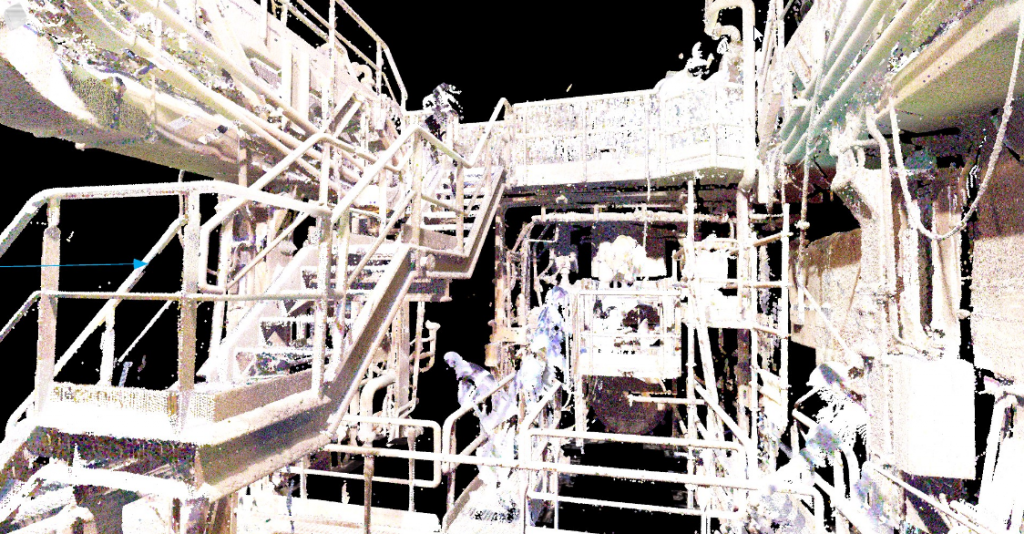

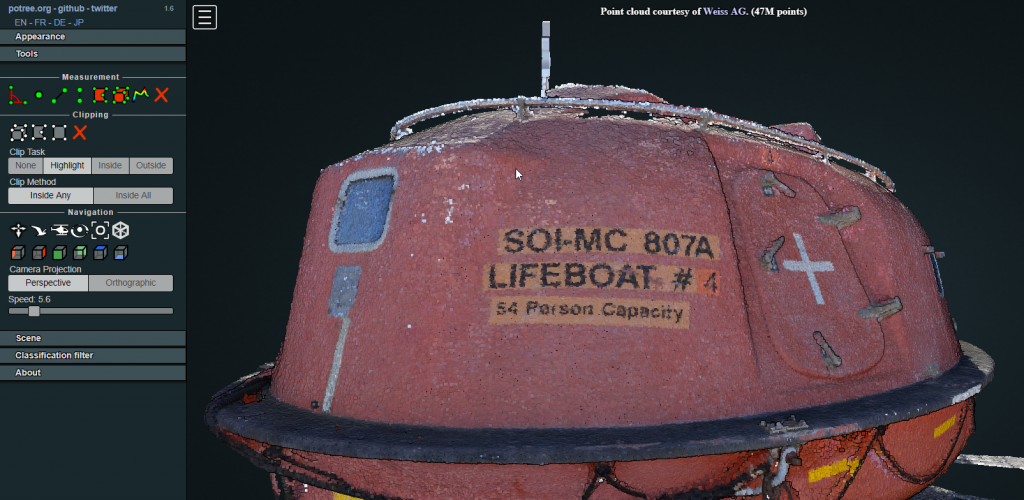

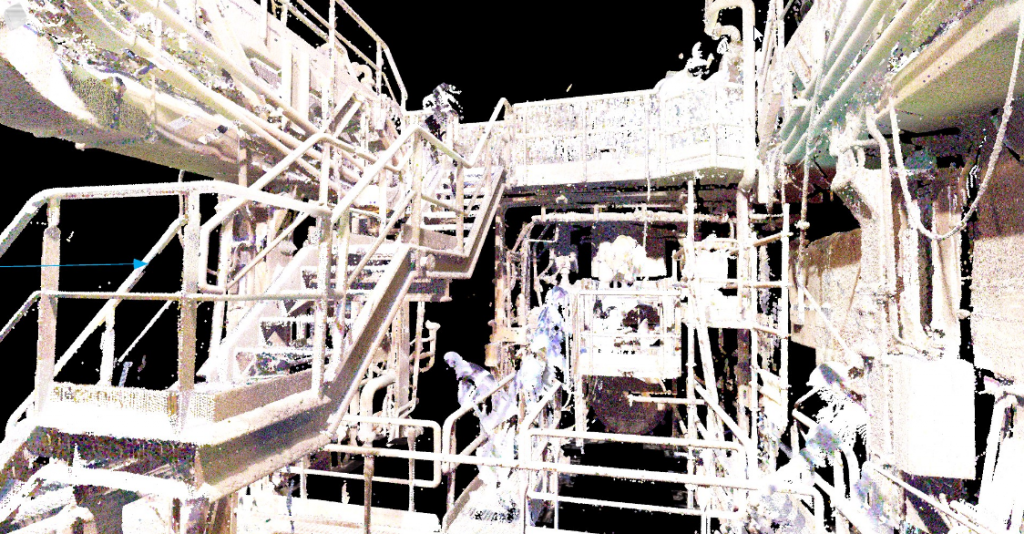

Potree allows display and interaction with large point clouds in browsers

Our component provides instant, fully point-populated images, using a scene organization derived from an octree.
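For readers unfamiliar with octrees, the following Python sketch shows the general idea. It is a generic textbook structure written for illustration, not the CloudXP implementation: the class name `OctreeNode`, the `capacity` parameter and the `query_box` method are assumptions, and points are assumed to lie inside the root cube. Each cubic cell splits into eight children once it holds too many points, so a spatial query can skip whole regions of the cloud instead of testing every point.

```python
class OctreeNode:
    """Cubic cell that stores points and splits into 8 children when full."""

    def __init__(self, center, half_size, capacity=4):
        self.center = center
        self.half_size = half_size
        self.capacity = capacity
        self.points = []
        self.children = None  # becomes a list of 8 nodes after a split

    def _child_index(self, p):
        # One bit per axis: is the point on the positive side of the centre?
        cx, cy, cz = self.center
        return (p[0] >= cx) | ((p[1] >= cy) << 1) | ((p[2] >= cz) << 2)

    def _split(self):
        h = self.half_size / 2
        cx, cy, cz = self.center
        self.children = [
            OctreeNode((cx + (h if i & 1 else -h),
                        cy + (h if i & 2 else -h),
                        cz + (h if i & 4 else -h)), h, self.capacity)
            for i in range(8)
        ]
        # Push the points held by this cell down into the new children.
        pending, self.points = self.points, []
        for p in pending:
            self.children[self._child_index(p)].insert(p)

    def insert(self, p):
        if self.children is not None:
            self.children[self._child_index(p)].insert(p)
            return
        self.points.append(p)
        # The size guard stops endless splitting on duplicate points.
        if len(self.points) > self.capacity and self.half_size > 1e-6:
            self._split()

    def query_box(self, lo, hi):
        """Yield the stored points inside the axis-aligned box [lo, hi]."""
        for c, l, h in zip(self.center, lo, hi):
            if c + self.half_size < l or c - self.half_size > h:
                return  # this cell does not overlap the box: skip it entirely
        if self.children is not None:
            for child in self.children:
                yield from child.query_box(lo, hi)
        else:
            for p in self.points:
                if all(l <= v <= h for v, l, h in zip(p, lo, hi)):
                    yield p


# Hypothetical data: 15 points along the diagonal of a 16 m cube.
root = OctreeNode(center=(0.0, 0.0, 0.0), half_size=8.0)
for i in range(-7, 8):
    root.insert((float(i), float(i), float(i)))

near_origin = sorted(root.query_box((-1.0, -1.0, -1.0), (1.0, 1.0, 1.0)))
print(near_origin)
```

The same pruning principle is what makes view-dependent selection possible: cells outside the field of view, or too far away to matter, are never visited.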

We had the opportunity to work with different companies in various contexts, such as Vinci for learning purposes. Here is live footage of the user experience:

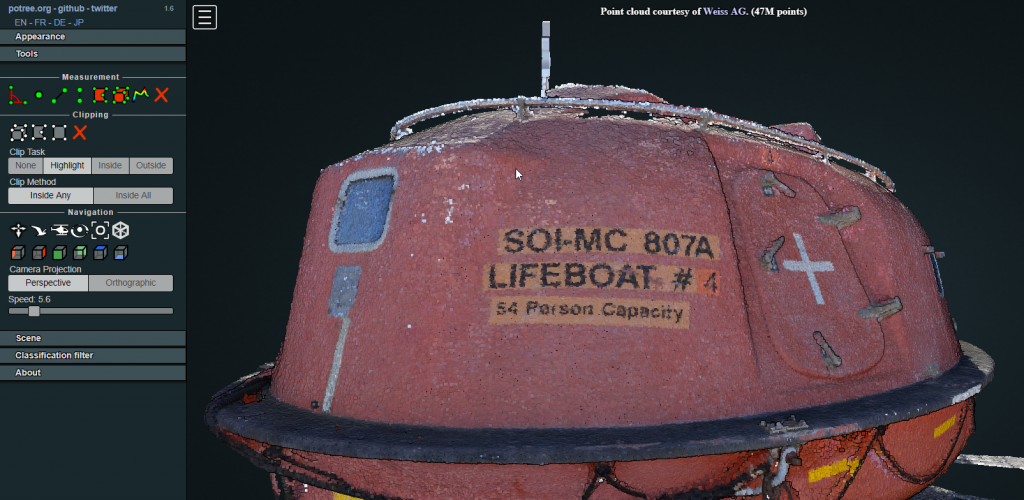

CloudXP used for training purposes

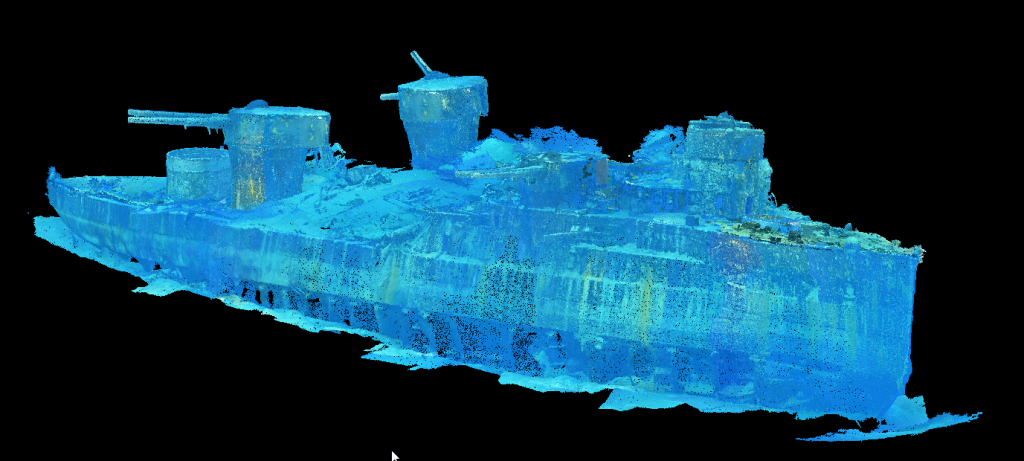

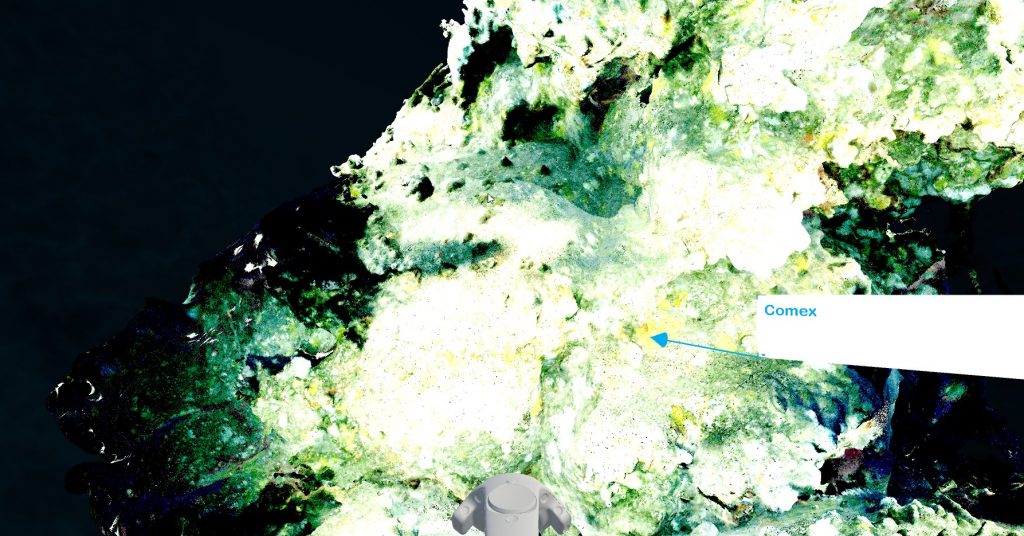

We also worked with Comex and Ipso Facto on real-time immersion into deep subsea environments. The following examples immerse users in unreachable places.

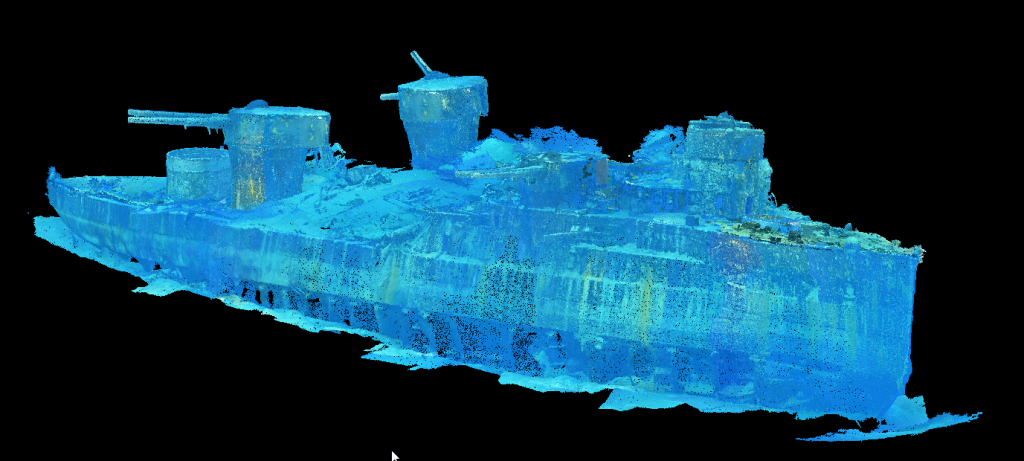

The Danton French battleship (Ipso Facto), VR real time experience

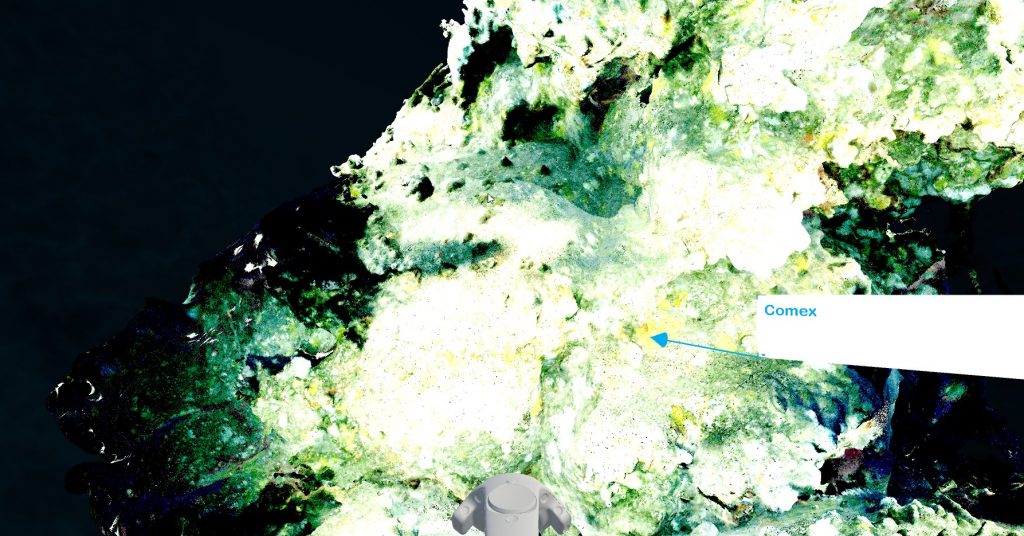

Coral evolution (Comex), VR real time experience

We also had the opportunity to do some research with companies such as EDF (and Daniel Girardeau-Montaut, the creator of the popular CloudCompare software) that wanted to use sensitive captured contexts for simulation purposes, involving 3D models and point cloud/mesh collision detection.

At the end of the day, the main needs we address are:

- Reducing costs and shortening the production cycle

- Being independent of the capture devices and format agnostic

- Interacting with heavy data sets with a reduced perception/reaction loop

- Having a natural and intuitive real-time experience in captured realities, whatever the size of the point clouds, without being a specialist in point cloud processing

- Comparing two different situations, for instance the “as designed” 3D (CAD models) and the “as built” result (3D scan)

- Instant and distant collaboration

- Collision detection

- Applicative features, the most obvious being: display of photospheres, notes, 3D selection, mesh import and manipulation, points of interest, measurements …
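As an illustration of the “as designed” versus “as built” comparison, here is a deliberately simplified Python sketch. It assumes the designed element is a flat wall described by a plane, whereas a real CAD model would require a point-to-mesh distance; the function names, the sample coordinates and the 1 cm tolerance are all hypothetical. Each scanned point gets a signed distance to the designed plane, and the points beyond the tolerance are flagged.

```python
import math

def plane_deviation(points, plane_point, plane_normal):
    """Signed distance of each scanned point to a designed plane."""
    length = math.sqrt(sum(c * c for c in plane_normal))
    n = tuple(c / length for c in plane_normal)  # unit normal
    return [sum((p[i] - plane_point[i]) * n[i] for i in range(3))
            for p in points]

def out_of_tolerance(points, plane_point, plane_normal, tolerance):
    """Return (point, deviation) pairs whose deviation exceeds the tolerance."""
    deviations = plane_deviation(points, plane_point, plane_normal)
    return [(p, d) for p, d in zip(points, deviations) if abs(d) > tolerance]

# As designed: a wall on the plane x = 2.0 m (hypothetical values).
# As built: four points scanned on the real wall.
scan = [(2.0, 0.0, 0.0), (2.004, 1.0, 0.5), (2.03, 2.0, 1.0), (1.95, 3.0, 1.5)]

flagged = out_of_tolerance(scan, plane_point=(2.0, 0.0, 0.0),
                           plane_normal=(1.0, 0.0, 0.0), tolerance=0.01)
for point, deviation in flagged:
    print(point, round(deviation, 3))
```

In this toy data set, the last two points are flagged: one bulges about 3 cm out of the designed wall and the other sits about 5 cm behind it.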